
The Annual Status of Education Report (ASER) 2017 presented findings from its Beyond Basics survey of young people aged 14 to 18. Parth J Shah, founder president of the Centre for Civil Society, is demanding that the entire dataset be placed in the public domain, because much of it is lost in processing.

Talking to News18's Eram Agha, Shah explained the various stages of data processing, what is lost along the way, and the data-sharing models practised worldwide for evidence-based policy:

You staged a silent protest when ASER unveiled its 2017 report because it released only processed data. Why?

Data is first cleaned of incorrect and missing data points, and then it is verified that actual people answered the survey and that their answers were accurately and fully recorded. Then it is anonymised: any personal information, or information that could identify the individuals or organisations involved in the survey, is removed. The cleaned, verified and anonymised data is called raw data, or analysis-ready data. This is the data that should be made available to the public so that people can do their own analysis.
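The cleaning and anonymisation steps described here can be sketched in a few lines of Python. This is a minimal illustration only: the records, the field names (`name`, `phone`, `age`, `state`, `math_score`) and the validity rules are hypothetical assumptions, not ASER's or NAS's actual procedure. The verification step, which in practice means checking back with respondents in the field, is not modelled.

```python
# Hypothetical survey records; field names and values are illustrative assumptions.
RAW_RESPONSES = [
    {"name": "A. Kumar", "phone": "XXXXX-00001", "age": 15, "state": "Rajasthan", "math_score": 62},
    {"name": "B. Devi", "phone": "XXXXX-00002", "age": 16, "state": "Kerala", "math_score": None},  # missing score
    {"name": "C. Singh", "phone": "XXXXX-00003", "age": 45, "state": "Kerala", "math_score": 80},   # outside 14-18
    {"name": "D. Nair", "phone": "XXXXX-00004", "age": 17, "state": "Kerala", "math_score": 88},
]

# Fields assumed to identify a respondent.
IDENTIFYING_FIELDS = {"name", "phone"}


def is_valid(record):
    """Cleaning: reject records with missing or out-of-range values (assumed rules)."""
    score = record.get("math_score")
    age = record.get("age")
    return (
        score is not None and 0 <= score <= 100
        and age is not None and 14 <= age <= 18
    )


def anonymise(record):
    """Anonymisation: drop fields that could identify a respondent."""
    return {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}


def prepare_analysis_ready(records):
    # Verification that real people answered (e.g. field back-checks)
    # happens outside this code and is not modelled here.
    return [anonymise(r) for r in records if is_valid(r)]


analysis_ready = prepare_analysis_ready(RAW_RESPONSES)
for row in analysis_ready:
    print(row)
```

The output keeps every substantive field of the two valid records while stripping names and phone numbers, which is the kind of unit-level, analysis-ready file Shah argues should be published.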

The National Sample Survey Office (NSSO) and the Unified District Information System for Education (U-DISE) provide input data and put all of it in the public domain, whereas the National Achievement Survey (NAS) and ASER present only processed data. What do we lose with processed data?

What NAS and ASER release are processed data, that is, the results of their own analysis. NAS, for example, ranks states based on the math scores of grade 5 students. Let’s say NAS administers its grade 5 math test to 1 lakh students in government schools across all the states. It then calculates the average math score for each state and uses these scores to rank the states from best performing to worst performing. What the public gets is a single table listing the average math score of grade 5 students in each state. With this data, the best one can do is rank the states or find the gap between two states (for example, if Rajasthan scores 60 out of 100 and Kerala scores 85, the difference is 25).
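A small Python sketch makes the loss concrete. The student-level records below are made up, chosen so that the state averages match the hypothetical Rajasthan (60) and Kerala (85) figures in the example; they are not NAS data, and the helper `average_by` is illustrative, not NAS methodology. `state_avg` corresponds to the published table, while the split by state and gender at the end can only be computed from unit-level records.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical unit-level records: (state, gender, math score out of 100).
students = [
    ("Rajasthan", "F", 55), ("Rajasthan", "M", 65), ("Rajasthan", "F", 58), ("Rajasthan", "M", 62),
    ("Kerala", "F", 88), ("Kerala", "M", 82), ("Kerala", "F", 86), ("Kerala", "M", 84),
]


def average_by(records, key):
    """Group records by key(record) and return the mean score of each group."""
    groups = defaultdict(list)
    for rec in records:
        groups[key(rec)].append(rec[2])
    return {k: mean(v) for k, v in groups.items()}


# What a processed report publishes: one average per state, then a ranking.
state_avg = average_by(students, key=lambda r: r[0])
ranking = sorted(state_avg, key=state_avg.get, reverse=True)
gap = state_avg["Kerala"] - state_avg["Rajasthan"]

# What only unit-level data allows: a cut the published table does not contain.
state_gender_avg = average_by(students, key=lambda r: (r[0], r[1]))

print(ranking)  # ['Kerala', 'Rajasthan']
print(gap)      # 25
print(state_gender_avg)
```

From the state averages alone, no one can recover the gender gap within Rajasthan or Kerala; with the unit-level records, that analysis is a one-line change to the grouping key.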

Sometimes, the collecting organisation will provide data split by gender, by caste, by school infrastructure (boundary wall, pucca building, drinking water facility) and so on. Often, much more data is collected than is put in the public domain. The reasons for not releasing it vary, ranging from the organisation not seeing any value in it to the data undermining the claims made in the report.

Ideally, we should be given the complete data collected, regardless of whether the collecting organisation uses it in its publication or sees any value in it. It should also be released not as aggregates but at the unit level at which it was collected, such as individual students or schools.

How many stages are there in data processing?

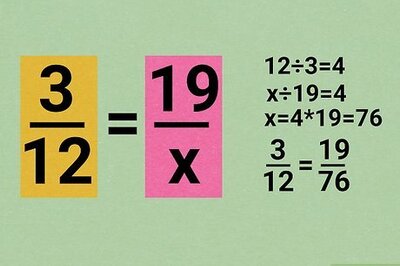

Data processing is the conversion of raw data into meaningful information. It starts with a hypothesis or question that the survey needs to answer. Then come questionnaire design, selection of the sample population to be surveyed, choice of the technology to be used, data compilation, data cleaning and cross-verification, after which the data is analysed and published.

There are six stages. ‘Collection’, the first stage of the cycle, should ensure that the data gathered is both well defined and accurate, so that subsequent decisions based on the findings are valid. Next is ‘Preparation’, the manipulation of data into a form suitable for further analysis and processing: constructing a dataset from one or more data sources for further exploration and processing. Then comes ‘Input’, the task in which verified data is coded or converted into machine-readable form so that it can be processed by a computer. This is followed by ‘Processing’, in which the input data is analysed and transformed into useful information, and ‘Output and Interpretation’, where the processed information is transmitted to the user. The final stage is ‘Storage’, where data, instructions and information are held for future use.

What is the significance of the input data collected by NSSO and U-DISE? Is the input data linked to the outcome data compiled by NAS and ASER? Do the two sets of data together give a complete picture?

Yes. Input data describes the factors that are important for outcomes. The two datasets are not linked, but researchers with full access to both can conduct various types of analyses to examine the correlation between inputs and outcomes.

Should we follow successful data-sharing models from other parts of the world? Are there success stories we can emulate in identifying problems and formulating solutions?

Almost all Western countries provide detailed school-level data on both inputs and outcomes. This information helps stakeholders (parents, policymakers, the wider public, school leaders and teachers) make important decisions about education.

This allows parents to choose a school that is right for their children. If parents begin to move their children out, that is a clear signal that the school is not performing well. If a school loses students, that usually also affects its funding. Policymakers get a clear picture of which schools are doing well and which ones need more attention. Helping parents make informed choices should be one of the goals of government and of data.

(The author is founder president of the Centre for Civil Society. Views are personal.)
