
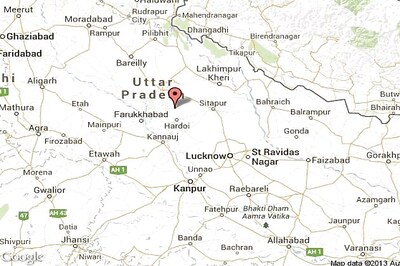

In a country like India, where internet connections are not strong everywhere, the shift to remote work and study has left many people struggling with poor-quality video calls. Tech companies know the problem extends well beyond India, so companies like Google use compression techniques to deliver the best possible audio and video quality during calls. Google is now testing a new codec that aims to significantly improve audio quality on weak network connections.

The Google AI team has detailed its new high-quality, low-bitrate speech codec, which it has named “Lyra.” Like other parametric codecs, Lyra’s basic architecture involves extracting speech attributes (also known as “features”) in the form of log mel spectrograms, which are compressed, transmitted over the network, and then recreated on the other end using a generative model. Unlike more traditional parametric codecs, however, Lyra uses a new high-quality audio generative model that not only extracts the key speech parameters but can also reconstruct speech from minimal data.
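To make the feature-extraction step more concrete, here is a minimal NumPy sketch of turning raw audio into a log mel spectrogram. The 16 kHz sample rate, 32 ms frames, 20 ms hop, and 80 mel bands are illustrative assumptions rather than Lyra’s published settings, and all function names are hypothetical. The compression and transmission steps that follow in a real codec are not shown.

```python
import numpy as np

# Illustrative parameters only; these are assumptions, not Lyra's actual configuration.

def hz_to_mel(f):
    """Convert frequency in Hz to the mel scale (HTK-style formula)."""
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters mapping the n_fft//2 + 1 FFT bins onto n_mels mel bands."""
    n_bins = n_fft // 2 + 1
    fft_freqs = np.linspace(0.0, sample_rate / 2.0, n_bins)
    # n_mels + 2 points spaced evenly on the mel scale give each filter a left, center, right edge.
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fb = np.zeros((n_mels, n_bins))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(signal, sample_rate=16000, frame_len=512, hop=320, n_mels=80):
    """Return an array of shape (n_frames, n_mels) of log mel energies."""
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, n=frame_len, axis=1)) ** 2      # (n_frames, frame_len//2 + 1)
    mel = power @ mel_filterbank(n_mels, frame_len, sample_rate).T     # (n_frames, n_mels)
    return np.log(mel + 1e-6)                                          # small floor avoids log(0)

if __name__ == "__main__":
    sr = 16000
    tone = np.sin(2 * np.pi * 440 * np.arange(sr) / sr)   # one second of a 440 Hz tone
    print(log_mel_spectrogram(tone).shape)                # (49, 80)
```

For one second of audio this produces 49 frames of 80 log-mel values each. In a parametric codec it is this frame-level description, not the individual samples, that gets compressed and sent.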

According to a Google blog post, the new model builds on Google’s earlier work on WaveNetEQ, the company’s model-based packet-loss-concealment system currently used in Google Duo. The company says this approach puts Lyra on par with the state-of-the-art waveform codecs used in many streaming and communication platforms today. Lyra’s advantage over these waveform codecs is that it does not send the signal sample by sample, which requires a higher bitrate. Instead, it uses a “cheaper recurrent generative model” that works “at a lower rate” but generates multiple signals in different frequency ranges in parallel, which are later combined “into a single output signal at the desired sample rate.” Google says that running this generative model in real time on a mid-range device yields a processing latency of 90 ms, which it says is in line with other traditional speech codecs.
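The idea of producing several lower-rate signals in different frequency ranges and combining them into one full-rate output can be illustrated with a simple filter bank. The sketch below swaps in a two-level Haar filter bank, a deliberately simple choice and not the filter bank Lyra uses, to split a 16 kHz signal into four bands of 4,000 samples per second each and then merge them back. All names here are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_split(x):
    """Split x into (low, high) bands, each at half the input sample rate."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / SQRT2, (even - odd) / SQRT2

def haar_merge(low, high):
    """Exact inverse of haar_split: rebuild the signal at the full sample rate."""
    out = np.empty(2 * len(low))
    out[0::2] = (low + high) / SQRT2
    out[1::2] = (low - high) / SQRT2
    return out

def split_bands(x, levels=2):
    """Each level halves every band's sample rate and doubles the number of bands."""
    if len(x) % (2 ** levels) != 0:
        raise ValueError("signal length must be divisible by 2**levels")
    bands = [x]
    for _ in range(levels):
        bands = [b for band in bands for b in haar_split(band)]
    return bands

def merge_bands(bands):
    """Undo split_bands by merging adjacent pairs until one full-rate signal remains."""
    while len(bands) > 1:
        bands = [haar_merge(bands[i], bands[i + 1]) for i in range(0, len(bands), 2)]
    return bands[0]

if __name__ == "__main__":
    fs = 16000
    x = np.random.default_rng(0).standard_normal(fs)   # one second of test signal
    bands = split_bands(x, levels=2)
    print(len(bands), [len(b) for b in bands])          # 4 [4000, 4000, 4000, 4000]
    print(np.allclose(merge_bands(bands), x))           # True
```

A model that predicts the four band signals together would need only 4,000 sequential steps per second instead of 16,000, which is the kind of saving that makes a lower-rate recurrent model cheaper to run. Haar filters reconstruct the input exactly but separate frequencies poorly; sharper designs such as pseudo-QMF filter banks are a common choice in multi-band neural vocoders.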

Google says it trained Lyra on thousands of hours of audio from speakers of more than 70 languages, drawn from open-source audio libraries, and then verified the audio quality with expert and crowdsourced listeners. Because Lyra is designed to operate at bitrates as low as 3 kbps, pairing it with the AV1 video codec could enable video chats even over a 56 kbps dial-in modem.
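The dial-up claim comes down to simple bandwidth arithmetic. The sketch below subtracts a 3 kbps audio stream from a 56 kbps link to show what remains for video. The optional overhead fraction and the 40 ms frame length are hypothetical parameters added for illustration, and a real link would lose some further capacity to protocol overhead.

```python
def video_budget_kbps(link_kbps, audio_kbps, overhead_fraction=0.0):
    """Bandwidth left for video after the audio stream and an assumed overhead share."""
    usable = link_kbps * (1.0 - overhead_fraction)
    return usable - audio_kbps

def bits_per_frame(bitrate_bps, frame_ms):
    """Bits available to encode one audio frame of frame_ms milliseconds."""
    return bitrate_bps * frame_ms / 1000.0

if __name__ == "__main__":
    print(video_budget_kbps(56, 3))   # 53.0 kbps left for video, ignoring overhead
    print(bits_per_frame(3000, 40))   # 120.0 bits per hypothetical 40 ms frame
```

Even if a fifth of the link were lost to overhead, roughly 41.8 kbps would remain for video, which is why a 3 kbps speech codec leaves room for a low-bitrate video stream.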
